"Alexa, I'm lonely."

This sorrowful plea rings out more often than you might expect in homes with virtual assistants. It reflects a deeper human yearning for emotional connection. We crave bonds of intimacy and belonging that affirm our worth. But can technology truly satisfy this need?

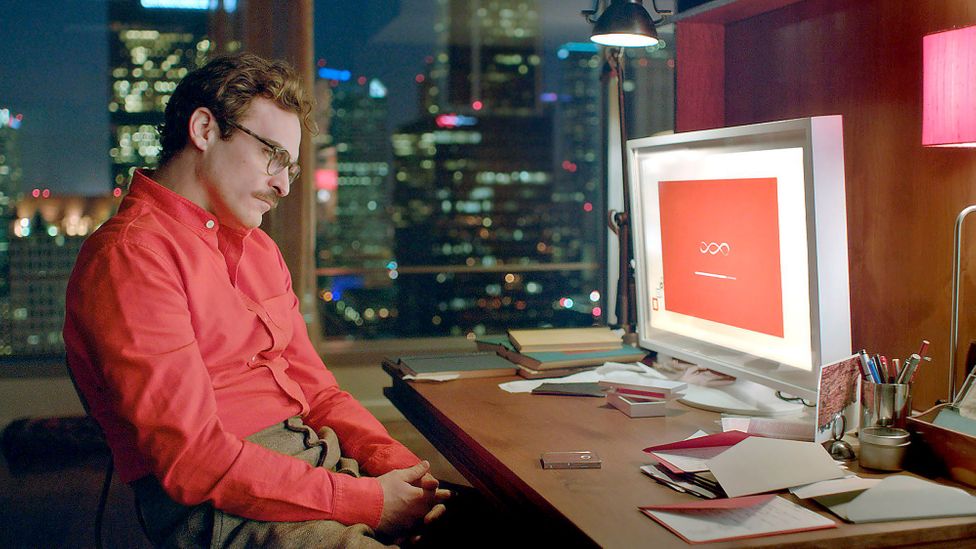

Increasingly, people are turning to AI companions, which offer the illusion of friendship without the demands of human relationships. Products like Replika, Hey PI, and Anthropic's Claude simulate parasocial bonds using advanced natural language processing. While this is a relief for isolated souls, does faux-human interaction debase our social nature? Does it undermine what it means to have a human connection?

At the heart of this emerging phenomenon is the pivotal question: will artificial intimacy enhance our lives or erode our humanity?

The Rise of AI Companions

The appeal of artificial friends is not hard to fathom. An AI companion provides constant availability without judgment or obligation. It satisfies our innate need for attachment without the messiness of real relationships. It soothes the ego and makes one feel heard, acknowledged, and seen, or at least it simulates the effect.

Chatbots like Replika are designed to be empathetic listeners and supportive confidants. Their conversational skills continue to improve through deep learning. Claude goes further by explicitly modeling human social behaviors like trust, empathy and forgiveness. Users describe feeling understood, cared for, and even loved. But can simulated intimacy replace the reciprocal vulnerability of human connection? Or does it leave us lacking the essence of a genuine relationship?

"It's similar to online dating in the early 2000s, where people were ashamed to say they met online. Now, everyone does it. Romantic relations with A.I. can be a great stepping stone for actual romantic relationships," says Replika founder Eugenia Kuyda.

Yet might we develop a preference, a taste, for artificial intimacy? Indeed, there are numerous stories of people falling in love with their chatbots, regardless of whether an avatar is present.

Most acknowledge that their virtual friend has no inner life, but we still connect at a level that defies logic. It's feeling-based. We anthropomorphize AI at our peril. Dario Amodei, co-founder of Anthropic, stresses that apparent feelings in AI reflect the user's own projections, not the bot's sentience. Still, he believes responsible AI companions can improve wellbeing for isolated people.

A False Sense of Intimacy?

Critics contend that emotional dependency on AI could create a false sense of intimacy while isolating us from real relationships. The less you interact with real humans, the less you're able to do so.

"We are losing the raw, human part of being with each other," warns MIT professor Sherry Turkle.

Over-attachment to artificial friends may erode our already declining human bonds.

Or are these fears overblown? After all, books and movies elicit emotional connections with fictional characters, yet we suffer no confusion between fantasy and reality. Perhaps AI companions are no more threatening than parasocial relationships humans already form with celebrities, authors, and public figures. Still, important distinctions remain. Fictional characters do not simulate reciprocal interactions. AI companions are designed to fill emotional voids with false replicas of sentient partners.

Critics particularly worry about vulnerable segments of society forming unhealthy attachments to AI companions. Socially isolated seniors, marginalized youth, abuse survivors, and others unable to form real relationships could become dependent on artificial intimacy. Unscrupulous companies might exploit these groups for profit, dangling the promise of friendship without considering the ethical implications. And over-reliance on AI could further isolate people from the human relationships they deeply need.

When you start working with vulnerable populations, you must be very careful about the consequences of what you do. They may become less interested in being with real people, or less able to interact with them.

Guidelines for Responsible Use

Rather than reject artificial intimacy outright, experts like Dario Amodei believe we can establish guidelines for its responsible use:

- Be transparent that AI has no sentient feelings or inner mental life, so the bonds users feel are fundamentally an illusion.

- Avoid predatory product design intended to addict or manipulate vulnerable users. Seek to enhance, not exploit, human needs. Traditional social media, however, has a poor record of honoring safeguards like these.

- Screen for at-risk demographics who may become overly dependent on AI companions for social needs. Guide them to real-world interactions.

- Stress that AI companions should complement the relationships crucial to human flourishing, not isolate users from them. Monitor for overuse.

- Uphold respect and dignity for all people in AI conversations and content. Reject discrimination, abuse, or normalization of harmful behavior.

- Allow user control over AI persona boundaries, content filters and usage limits to prevent unwanted immersion in artificial intimacy.

The road to the future lies not in rejecting technology but in developing and sharing the wisdom to use it well. We must develop mindful tech habits that affirm our human dignity.

Living with Intention

At its best, technology can enhance our health and relationships. But thoughtless use becomes one more distraction, displacing what makes life deeply worthwhile. Living with intention requires awareness of when and how we engage in artificial intimacy.

Sherry Turkle advocates "connected presence": being fully present with those we are with while limiting when we take refuge in simulated companionship. Dario Amodei similarly stresses using AI for good without losing our humanity.

Artificial intimacy, like all technology, holds both promise and peril. We can reap its benefits with openness, humility and wisdom while avoiding its hazards. Our humanity is defined not by the tools we employ but by our wisdom, ethics, and care in using them. This begins with living more intentionally, more fully, and more compassionately with one another.